Introduction

This toy project was originally created for a guest lecture to a Data Science 101 course (and its quality may reflect that :) This post extends that lecture, designed to provide a high-level understanding and example of Retrieval Augmented Generation (RAG).

We’ll go through the steps of creating a RAG based LLM system, explaining what we’re doing along the way, and why.

You can follow along with the slides and code here

The CheeseGPT System

CheeseGPT combines Large Language Models (LLMs) with the advanced capabilities of Retrieval-Augmented Generation (RAG). At its core, CheeseGPT uses OpenAI’s GPT-4 model for natural language processing. This model serves as the backbone for generating human-like text responses. However, what sets CheeseGPT apart is its integration with Langchain and a Redis database containing all of the information on Wikipedia relating to cheese.

When a user asks a question, the system utilizes RAG to retrieve the most relevant information/documents from its vector database, and then includes those in its message to the LLM. This allows the LLM to have specific and up-to-date information to use, extending from the data that it was trained on.

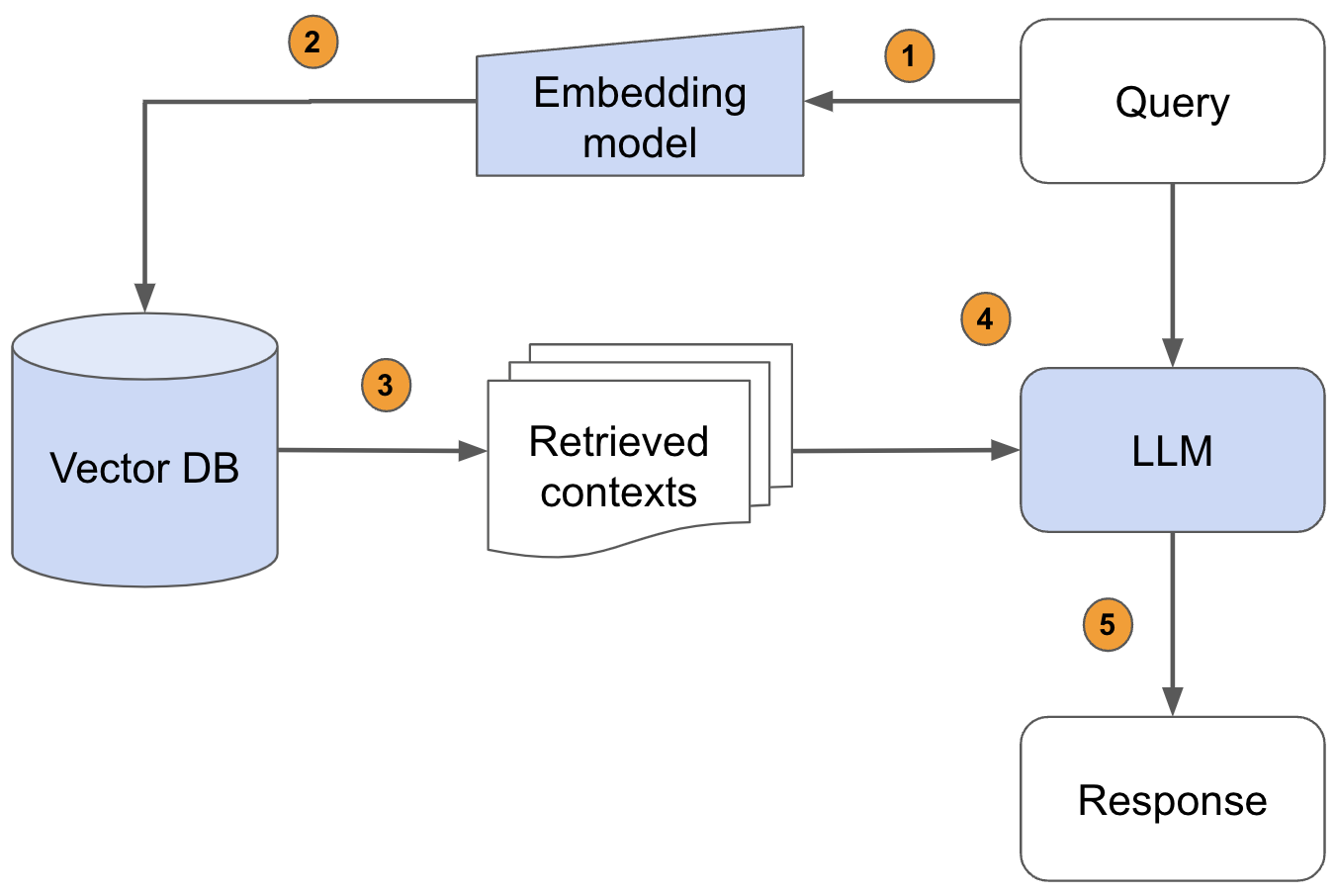

The image below, flow from right to left (steps 1-5) shows the high level design of this. The user’s query is passed into our embedding model. We do a similarity search against our database to retrieve the most relevant documents to our users question. And then these are included in context passed to our LLM.

https://www.anyscale.com/blog/a-comprehensive-guide-for-building-rag-based-llm-applications-part-1

Below, we’ll outline the steps to building this system.

NOTE: this is an example, and probably doesn’t make a ton of sense as a useful system. (For one, we’re getting our data from wikipedia, which is already contained within the training data of GPT-4) This is meant to be a high level example that can show how a RAG based system can work, and to show what the possibilities are when integrating external data with LLMs (proprietary data, industry specific technical docs, etc.)

Data Collection and Processing

As with most projects, getting and munging your data is one of the most time consuming yet crucial elements. For our CheeseGPT example, this involved scraping Wikipedia for cheese-related articles, generating embeddings, and storing them in a Redis database. Below, I’ll outline these steps with code snippets for clarity.

Scraping Wikipedia

We start by extracting content from Wikipedia. We made a recursive function get_page_content to fetch pages related

to cheese, including summaries and sections. (Note: This function could definitely be improved.)

1

2def get_page_content(page_title, depth, max_depth):

3 wiki_wiki = wikipediaapi.Wikipedia('MyCheeseRAGApp/1.0 (myemail@example.com)')

4 if depth > max_depth or page_title in visited_pages:

5 return [], []

6 visited_pages.add(page_title)

7

8 if len(visited_pages) % 10 == 0:

9 logger.info(f"Visited pages: {len(visited_pages)}")

10 logger.info(f"Fetching page '{page_title}' (depth={depth})")

11

12 try:

13 page = wiki_wiki.page(page_title)

14 if not page.exists():

15 return [], []

16

17 texts, metadata = [], []

18 if len(texts) % 100:

19 logger.info(f"Texts length {len(texts)}")

20

21 # Add page summary

22 texts.append(page.summary)

23 metadata.append({'title': page_title, 'section': 'Summary', 'url': page.fullurl})

24

25 # Add sections

26 for section in page.sections:

27 texts.append(section.text)

28 metadata.append({'title': page_title, 'section': section.title, 'url': page.fullurl})

29

30 # Recursive fetching for links

31 if depth < max_depth:

32 for link_title in page.links:

33 link_texts, link_metadata = get_page_content(link_title, depth + 1, max_depth)

34 if len(link_texts) > 0:

35 texts.extend(link_texts)

36 metadata.extend(link_metadata)

37

38 return texts, metadata

39 except Exception as e:

40 logger.error(f"Error fetching page '{page_title}': {e}")

41 return [], []

This is a very greedy (and lazy) approach. We don’t discriminate at all, and we end up with a ton of noise (things not related to cheese at all), but for our purposes of example, it works.

Generating Embeddings

Next, we need to generate our embeddings from our collected documents.

What are embeddings?

Embeddings are high-dimensional, continuous vector representations of text, words, or other types of data, where similar items have similar representations. They capture semantic relationships and features in a space where operations like distance or angle measurement can indicate similarity or dissimilarity.

In machine learning, embeddings are used to convert categorical, symbolic, or textual data into a form that algorithms can process more effectively, enabling tasks like natural language processing, recommendation systems, and more sophisticated pattern recognition.

With our textual data collected, we’ll be using OpenAI and Langchain to generate our embeddings. There are lots of different ways to generate embeddings (plenty packages that run locally, too), but using OpenAI API to get them is fast and easy for us. (and also dirt cheap)

NOTE: In a true production system, there would be much more consideration taken around generating embeddings. This is arguably the most important step in a RAG based system. We’d need to do experimentation with chunk size to see what gives us the best results. We’d need to explore our vectors to make sure their working as expected, remove noise, etc.

Generating embeddings using LangChain and OpenAI

Langchain makes it very easy to do create embeddings and store them in Redis without much thought, but this step requires extreme care to generate good results in a production system

The snippet below takes our scraped wikipedia sections, generates embeddings for them using OpenAI’s embeddings API, and stores them in Redis. Again, LangChain abstracts away a ton of complexity and makes this really easy for us.

1from langchain.embeddings import OpenAIEmbeddings

2

3embeddings = OpenAIEmbeddings()

4rds = Redis.from_texts(

5 texts,

6 embeddings,

7 metadatas=metadata,

8 redis_url="redis://localhost:6379",

9 index_name="cheese",

10)

Implementation of RAG

Our RAG operates by creating an embedding of the user’s question and then finding the most semantically similar documents in our database (via cosine similarity between the embedding of our user’s query and the N closest documents in our database).

We then include these documents / snippets in our request to the LLM, telling it that they are the most relevant documents based on a similarity search. The LLM can then use these documents as reference when generating its response.

Here’s a simplified overview of the process with code snippets:

Embedding User Queries

The user’s query is converted into an embedding using the OpenAI API. This embedding represents the semantic content of the query in a format that can be compared against the pre-computed embeddings of the database articles.

1from langchain.embeddings import OpenAIEmbeddings

2

3embeddings = OpenAIEmbeddings()

4query_embedding = embeddings.embed_text(user_query)

Retrieving Related Articles

We then use the query embedding to perform a similarity search in the Redis database. It retrieves a set number of articles that are most semantically similar to the query.

1def get_related_articles(query_embedding, k=3):

2 return rds.similarity_search(query_embedding, k=k)

Integrating Retrieved Data into GPT-4 Prompts

The retrieved articles are formatted and integrated into the prompt for GPT-4. This allows GPT-4 to use the information from these articles to generate a response that is not only contextually relevant but also rich in content.

1def create_prompt_with_articles(query, articles):

2 article_summaries = [f"{article['title']}: {article['summary']}" for article in articles]

3 return f"Question: {query}\n\nRelated Information:\n" + "\n".join(article_summaries)

Generating the Response

Finally, the enriched prompt is fed to GPT-4, which generates a response based on both the user’s query and the additional context provided by the retrieved articles.

1response = openai.ChatCompletion.create(

2model="gpt-4",

3messages=[{"role": "system", "content": create_prompt_with_articles(user_query, related_articles)}]

4)

Through this process, CheeseGPT effectively combines the generative power of GPT-4 with the information retrieval capabilities of RAG, resulting in responses that are informative, accurate, and contextually rich.

The Chat Interface

CheeseGPT’s chat interface is an important component, orchestrating the interaction between the user, the retrieval-augmented generation system, and the underlying Large Language Model (LLM).

For the purposes of our example, we have built the bindings for the interface, but did not create a fully interactive interface.

Let’s dive into the key functions that make this interaction possible.

Connecting to Redis

1def rds_connect():

2 rds = Redis.from_existing_index(

3 embeddings,

4 redis_url="redis://localhost:6379",

5 index_name="cheese",

6 schema="redis_schema.yaml",

7 )

8 return rds

This function establishes a connection to the Redis database, where the precomputed embeddings of cheese-related Wikipedia pages are stored.

Applying Filters

1def get_filters():

2 is_not_external_link = RedisFilter.text("section") != 'External Links'

3 is_not_see_also = RedisFilter.text("section") != 'See Also'

4 _filter = is_not_external_link & is_not_see_also

5 return _filter

Filters are applied to ensure that irrelevant sections like ‘External Links’ and ‘See Also’ are excluded from the search results.

Deduplicating Results

1def dedupe_results(results):

2 seen = set()

3 deduped_results = []

4 for result in results:

5 if result.page_content not in seen:

6 deduped_results.append(result)

7 seen.add(result.page_content)

8 return deduped_results

This function ensures that duplicate content from the search results is removed, enhancing the quality of the final output. (This is necessary in our case because we were greedy / lazy when pulling our data / generating our vectors)

Retrieving Document Results

1def get_results(rds, question, k=3):

2 _filters = get_filters()

3 results = dedupe_results(rds.similarity_search(question, k=k, filter=_filters))

4 return results

This key function performs a similarity search in the Redis database using the user’s query, filtered and deduplicated.

Formatting RAG Results

1def format_rag_results(rag_results):

2 divider = "*********************RESULT*********************\n"

3 return [f"{divider} {result.page_content} ({result.metadata['url']}#{result.metadata['section']})" for result in

4 rag_results]

The function formats the search results, making them readable and including the source information for transparency.

This is what our message looks like when we send it to GPT-4. Our system prompt is first and includes instructions for the model to use the retrieved documents when answering the question.

In our user message, you can see the user’s question, and then the documents we retrieved, presented as a list with some formatting.

[

{

"role": "system",

"content": "You are cheeseGPT, a retrieval augmented chatbot with expert knowledge of cheese. You are here to answer questions about cheese, and you should, when possible, cite your sources with the documents provided to you."

},

{

"role": "user",

"content": "User question: what is the biggest cheese sporting event. Retrieved documents: ['*********************RESULT*********************\\n The Lucerne Cheese Festival (German: Käsefest Luzern) is a cheese festival held annually in Lucerne, Switzerland. It was established in 2001 and is normally run on a weekend in the middle of October at the Kapellplatz (Chapel Square) in the city centre. The next festival is planned to take place on 14 October 2023.The event features the biggest cheese market in central Switzerland, and offers the greatest selection of cheeses. As well as the cheese market and live demonstrations of cheesemaking, typical events during the festival include a milking competition and music such as the Swiss alphorn.The 2012 event featured over 200 varieties of cheese over 23 market stalls, including goat and sheep cheese. The 2020 event was almost cancelled because of social distancing restrictions during the COVID-19 pandemic, but was approved a few days before with a strict requirement to wear masks. Instead of the Kapellplatz, the festival was run from the nearby Kurplatz (Spa Square). 288 variety of cheeses were available at the festival, including cheesemakers from outside the local region such as the Bernese Jura and Ticino, who had their own festivals cancelled. Around 5,800 people attended the festival, lower than the previous year, with around two-thirds fewer sales. The following year\\'s event continued restrictions, where customers had to taste and buy cheese at a distance, though masks were no longer mandatory. The 2022 event featured demonstrations of the cheese making process, a chalet built of Swiss cheese, and a \"cheese chalet\" hosting cheese fondue and raclette.India Times in 2014 called it out as one of 10 world food festivals for foodies. (https://en.wikipedia.org/wiki/Lucerne_Cheese_Festival#Summary)', '*********************RESULT*********************\\n In addition to sampling and purchasing more than 4,600 cheeses in the Cheese Pavilion, visitors to the show are treated to various attractions throughout the day including cheese making demonstrations, trophy presentations and live cookery demonstrations. (https://en.wikipedia.org/wiki/International_Cheese_Awards#Show features)', '*********************RESULT*********************\\n The first annual event was held in 2000 in Oxfordshire, and was founded by Juliet Harbutt. Each year it is preceded by the British Cheese Awards, a ceremony which Harbutt created in 1994, judged by food experts and farmers, in which the best cheeses are awarded bronze, silver and gold medals.\\nAll cheeses are tasted blind, and the winners can then display their awards during the public-attended festival. There are usually a variety of events at the festival such as seminars, masterclasses, and cheesemaking demonstrations.The event moved to Cheltenham in Gloucestershire in 2005. In 2006 the Sunday of the weekend was cancelled at great cost after the venue experienced flooding, and the decision was made to return to Oxfordshire in 2007.Early in 2008 the festival was sold to Cardiff Council; subsequently the event has been held in the grounds of Cardiff Castle in 2008, 2009, 2010, and 2011.The 2012 Great British Cheese Festival was held at Cardiff Castle on Saturday, September 22, and Sunday, September 23.\\nIn 2015, Harbutt returned to her native New Zealand. (https://en.wikipedia.org/wiki/The_Great_British_Cheese_Festival#History)']"

}

]

Generating Messages for the LLM

1def get_messages(question, rag_results):

2 messages = [

3 {"role": "system", "content": system_prompt},

4 {"role": "user",

5 "content": f"User question: {question}. Retrieved documents: {format_rag_results(rag_results)}"}

6 ]

7 return messages

This function prepares the input for the LLM, combining the system prompt, user question, and the retrieved documents.

The integration of these functions creates a seamless flow from the user’s question to the LLM’s informed response, enabling CheeseGPT to provide expert-level insights into the world of cheese.

Putting it all together might look something like…

1question = "what is the biggest cheese sporting event"

2results = get_results(rds, question, k=3)

3messages = get_messages(question, results)

4print(json.dumps(messages, indent=4))

5response = openai.ChatCompletion.create(

6 model="gpt-4",

7 messages=messages,

8)

9print(response)

Outcomes

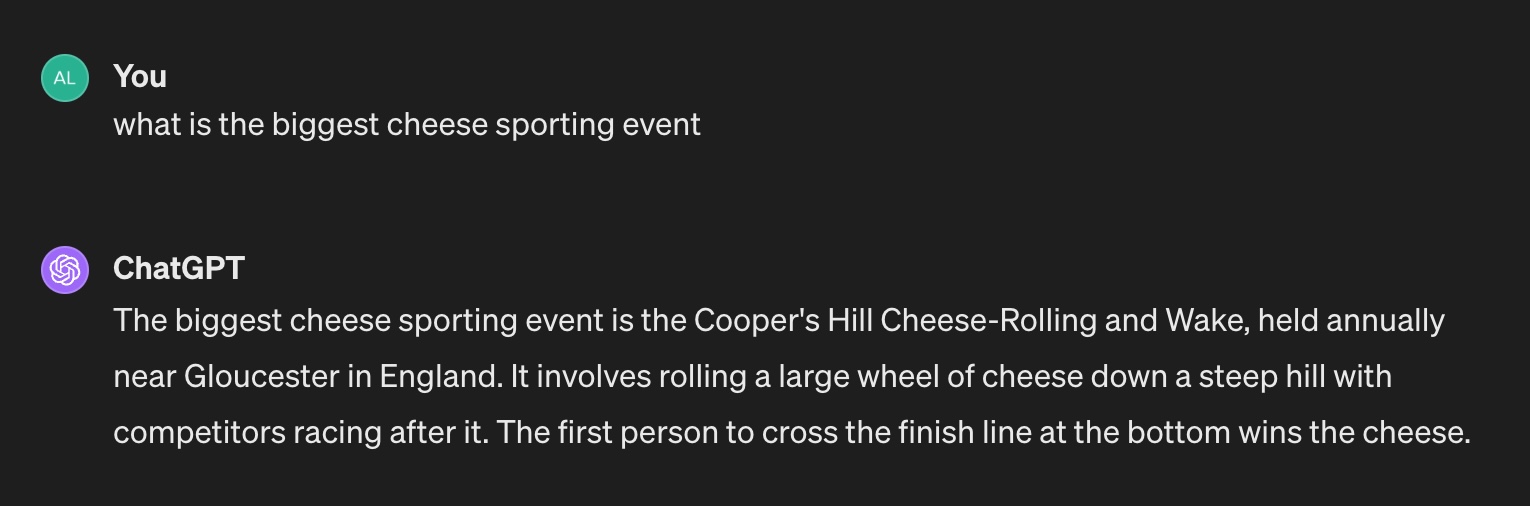

So, let’s compare a question using our system vs. asking ChatGPT. We’ll use the same question above.

Using our system, we get this response:

1The Lucerne Cheese Festival in Switzerland and the International Cheese Awards are two of the biggest cheese sporting events.

2

3The Lucerne Cheese Festival, a yearly event in Lucerne, Switzerland, features the largest cheese market in central Switzerland

4and provides a vast selection of cheeses. The festival also includes live cheesemaking demonstrations, a milking competition,

5and music. In past years, it has presented over 200 varieties of cheese over 23 market stalls, including goat and sheep cheese.

6The festival attracted around 5,800 attendees in 2020, despite social distancing restrictions. The India Times recognized it

7as one of 10 world food festivals for foodies in 2014 [^1^].

8

9On the other hand, the International Cheese Awards allow visitors

10to sample and purchase over 4,600 different cheeses in the Cheese Pavilion. The show features various attractions such as

11cheese making demonstrations, trophy presentations, and live cookery demonstrations [^2^].

12

13The Great British Cheese Festival, although not as large as the other two events, is also a notable cheese event. It

14hosts the British Cheese Awards, where the best cheeses are awarded bronze, silver, and gold medals, judged by food

15experts and farmers. All cheeses are tasted blindly, and the winners can display their awards during the festival.

16The festival typically includes seminars, masterclasses, and cheesemaking demonstrations [^3^].

17

18In conclusion, both the Lucerne Cheese Festival and the International Cheese Awards are considered some of the biggest

19cheese sporting events worldwide.

20

21[^1^]: (https://en.wikipedia.org/wiki/Lucerne_Cheese_Festival#Summary)

22[^2^]: (https://en.wikipedia.org/wiki/International_Cheese_Awards#Show_features)

23[^3^]: (https://en.wikipedia.org/wiki/The_Great_British_Cheese_Festival#History)"

And if we ask ChatGPT the same question…

I’m not sure which of these answer is more correct, and it doesn’t matter for the purposes of this example. The point is that we were

able to retrieve and include our own information, external to the model, and make it use that information in it’s response.

It’s clear how many amazing use cases there are for something like this!

Hopefully this high level toy example was able to shed some light on what a RAG based system may look like. Checkout the additional resources linked before for more in-depth information. Thanks for reading!

Additional resources

https://github.com/ray-project/llm-applications/blob/main/notebooks/rag.ipynb https://github.com/pchunduri6/rag-demystified https://www.anyscale.com/blog/a-comprehensive-guide-for-building-rag-based-llm-applications-part-1